Governance-first blueprint for AI patient intake and triage in GCC health systems

A practical look at deploying intake and triage agents with a generalist LLM and explicit guardrails — supporting Arabic dialects, real-time eligibility checks, and GCC data controls while keeping clinicians in the loop.

Frontier models like GPT-4 or Claude, used under strict guardrails, can safely support multilingual patient intake and triage. ScienceSoft’s field experience shows that such agents can be deployed in a governance-first way, without the costly fine-tuning of a specialized medical model. The main advantages of this pathway for GCC-based providers are:

Rapid rollout: AI agents take only a few months to build and require no new hardware.

Models support Arabic dialects and Arabizi.

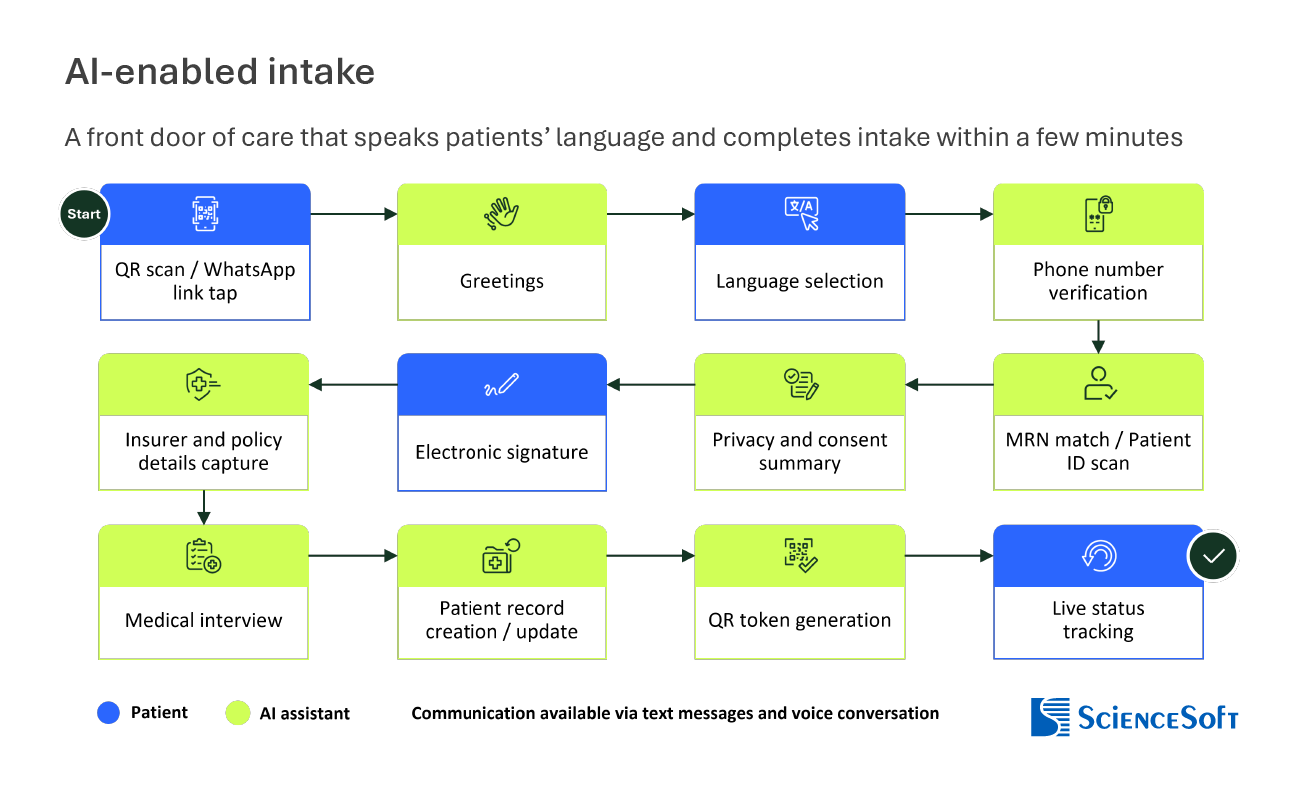

Patients access the tool through a QR code, WhatsApp, or a voice call.

Self-serve intake takes less than 5 minutes.

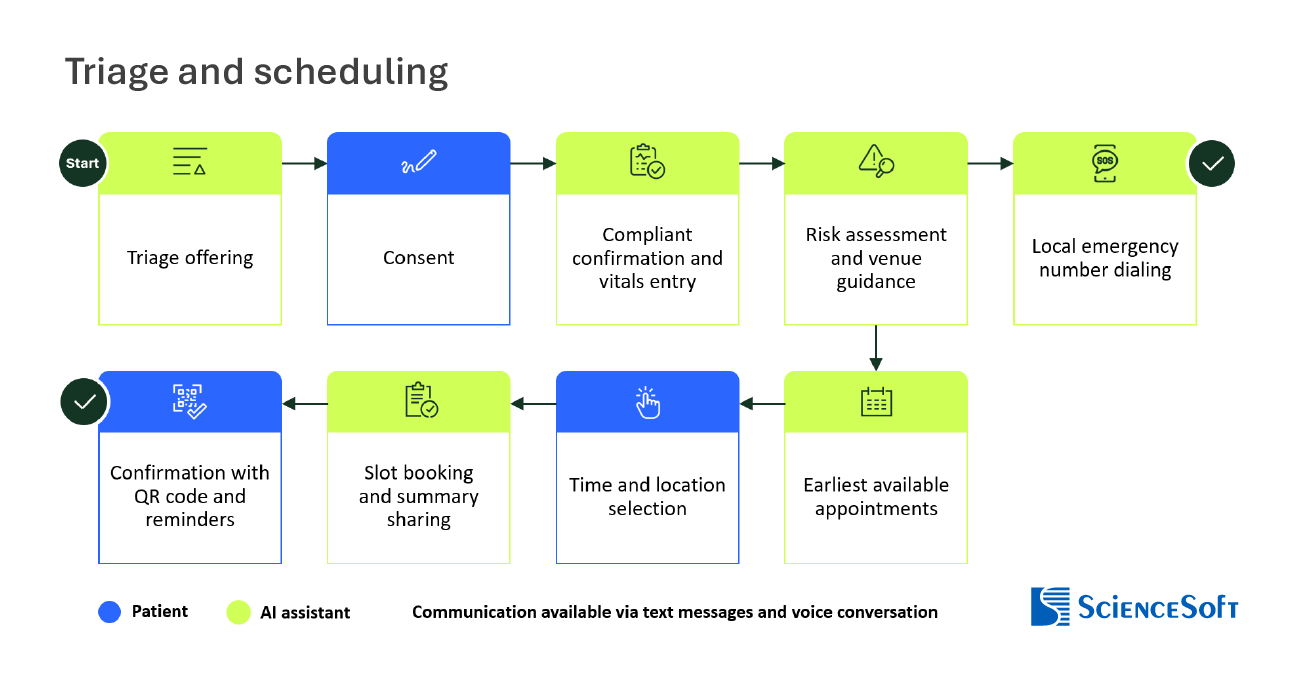

Models accurately identify high-risk situations and immediately escalate patients to human admins or emergency hotlines.

Models can capture insurance and enable real-time eligibility checks before the visit.

AI agents comply with GCC-specific consent, audit, and data controls.

The following blueprints for AI-enabled intake and triage were assembled and validated by the AI architects at ScienceSoft, a healthcare AI engineering consultancy that works closely with private and government healthcare organizations in the UAE and the KSA.

How Generalist LLM Orchestration Can Beat Fine-tuning Without Sacrificing Accuracy

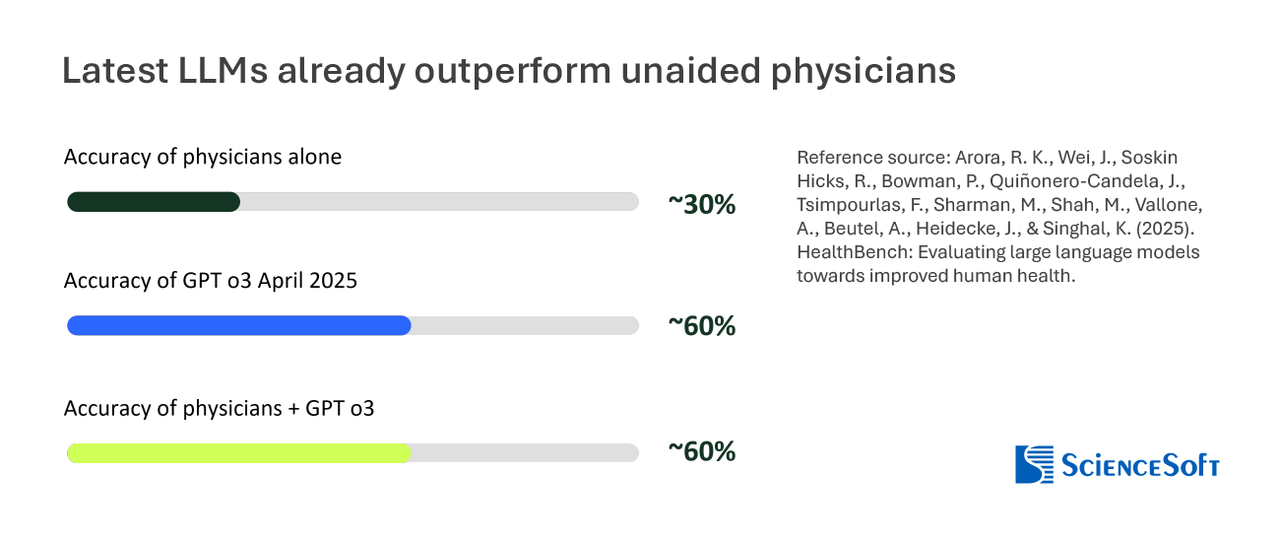

OpenAI’s HealthBench, which recently evaluated 5,000 patient-AI dialogues graded by 262 physicians across 60 countries, confirmed that generalist frontier models can reliably escalate emergencies and maintain coherent reasoning.

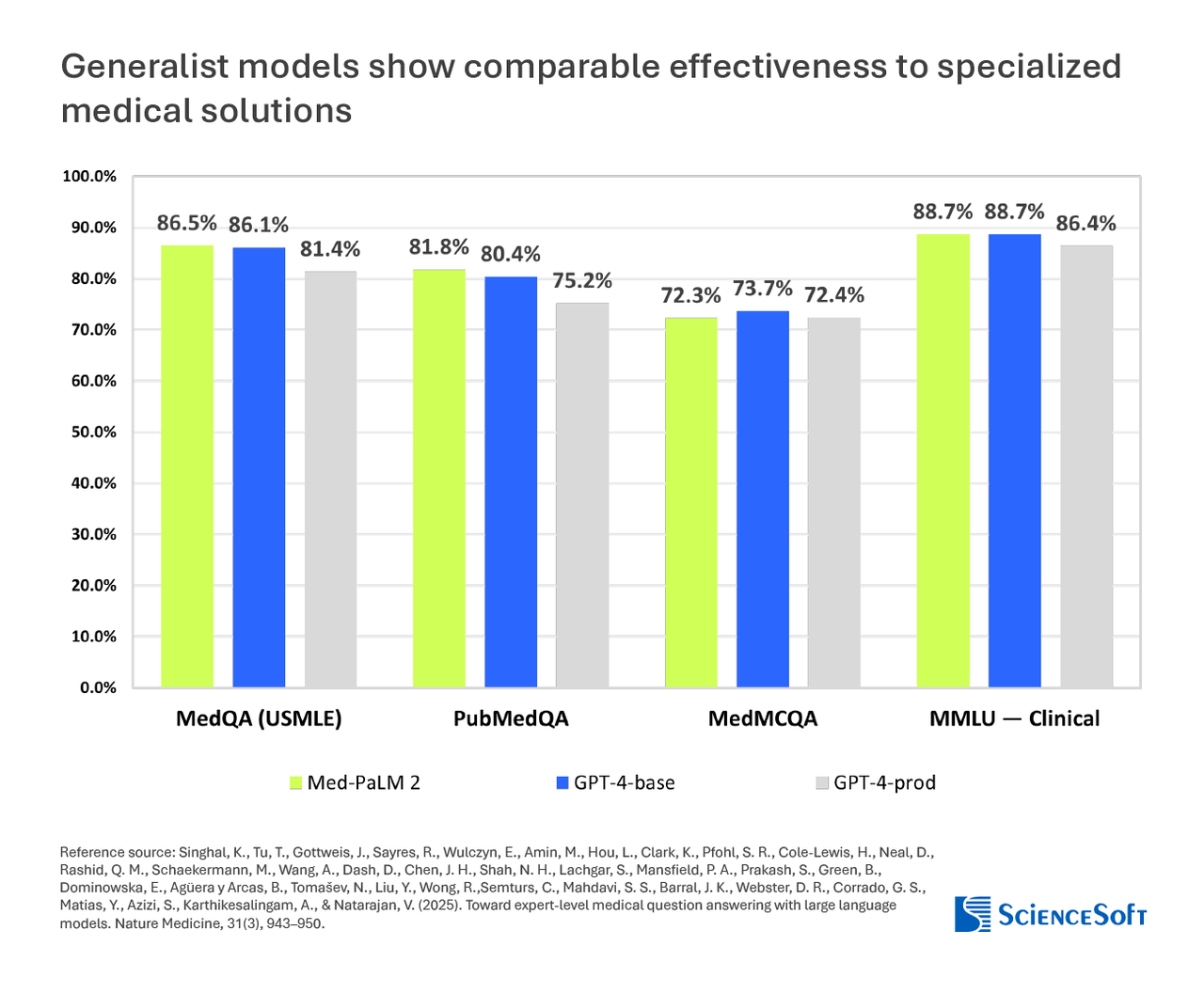

Moreover, a 2025 peer-reviewed study in Nature Medicine shows that custom-trained specialized medical models such as Med-PaLM 2 do not demonstrate significant accuracy gains compared to GPT-4.

Our experts believe that costly LLM fine-tuning is now warranted only in narrow, high-risk domains such as rare disease diagnostics, pediatric triage, or oncology protocol enforcement.

Built-In Arabic Support

Developing a specialized Arabic medical LLM is problematic because licensed Arabic medical texts are scarce, especially for dialects, and the process is slow and expensive.

Instead, ScienceSoft employed a generalist frontier model pretrained on massive multilingual web and media corpora. Such models handle dozens of languages and dialects, as well as code-switching, out of the box, and can be enhanced with orchestration and confidence-based guardrails.

This means that an orchestrated generalist AI model can support both English and Arabic, recognize numerous dialects, Arabizi, and mixed speech, transcribe voice reliably, generate standardized clinical notes, and pass them directly into scheduling and eligibility workflows without additional middleware.

How Orchestration Affects Deployment Speed and TCO of AI Agents

Orchestration and safety guardrails adapt AI assistants to specific healthcare provider workflows, communication channels, languages, and compliance demands far more effectively than heavy retraining. Skipping fine-tuning not only cuts months of costly work for data scientists and AI engineers but also provides broader flexibility in solution deployment, workflows, and adaptation.

The intake and triage agents in ScienceSoft’s blueprint require no additional hardware, as patients can access the system from their own devices through a web application or WhatsApp. OTP authentication, consent capture, real-time eligibility checks, and scheduling are delivered through standard modules connected to hospital IT systems. Providing these capabilities with a custom or fine-tuned LLM would demand months of retraining and validation for each workflow change.

Guardrails for Clinical Safety and Compliance

When deploying a generalist AI model for medical triage, guardrails form a separate governance layer around it. Because the safety rules sit outside the LLM, every trigger and handoff is visible, logged, and auditable. That makes risk management transparent and measurable, in contrast to opaque custom-tuned models where safety decisions are buried in the weights. It also allows organizations to adjust policies, add new rules, or adapt to other languages quickly and at lower cost, without retraining or revalidating the base model.
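To illustrate what "safety rules sit outside the LLM" can look like in practice, here is a minimal Python sketch under stated assumptions. The rule names, the keyword lists, and the `AuditLog` and `check_red_flags` helpers are hypothetical and not part of ScienceSoft’s blueprint. The keyword matching is English-only for brevity, whereas a real deployment would need clinically validated rules that also cover Arabic dialects and Arabizi. The log is made tamper-evident with a simple hash chain, which is one possible way to approximate an immutable audit trail.

```python
import hashlib
import json
from dataclasses import dataclass, field

# Hypothetical red-flag rules: a rule fires when ALL of its terms appear.
RED_FLAG_RULES = {
    "pregnancy_with_bleeding": ("pregnant", "bleeding"),
    "chest_pain_with_breathlessness": ("chest pain", "short of breath"),
}


@dataclass
class AuditLog:
    """Append-only log; each entry stores the hash of the previous entry."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(prev_hash: str, event: dict) -> str:
        payload = json.dumps(event, sort_keys=True, ensure_ascii=False)
        return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

    def append(self, event: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        entry = {"event": event, "prev_hash": prev_hash,
                 "hash": self._digest(prev_hash, event)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; any edited entry breaks verification."""
        prev_hash = "0" * 64
        for entry in self.entries:
            expected = self._digest(prev_hash, entry["event"])
            if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True


def check_red_flags(message: str, log: AuditLog) -> str:
    """Runs before any model call. Logs the decision, not the raw message."""
    text = message.lower()
    fired = [name for name, terms in RED_FLAG_RULES.items()
             if all(term in text for term in terms)]
    decision = "escalate" if fired else "continue"
    log.append({"decision": decision, "rules_fired": fired})
    return decision
```

Because the rules live in a plain data structure rather than in model weights, adding or changing a trigger is a configuration edit, and `log.verify()` lets an auditor confirm that recorded decisions have not been altered after the fact.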

Consultants at ScienceSoft suggest the following guardrails to secure clinical safety, security, and compliance of intake and triage AI agents.

Hard-coded red-flag interrupts: Explicit triggers like pregnancy with bleeding stop the conversation and escalate to staff or emergency instructions.

Abstention thresholds: When confidence is low (e.g., uncertain dialect input), the agent asks clarifiers or hands off the conversation to a human instead of guessing.

Scope walls: The agent only performs intake, summarization, routing, and booking. Diagnosis or prescribing stays strictly out of scope unless explicitly expanded.

Human-in-the-loop: Nurses and administrators review AI-drafted ISBAR summaries, routing, and scheduling. Critical decisions remain under clinician control.

Explainability and clinical audit: Every action includes a rationale and an immutable log entry stored in GCC regions, so that clinicians and auditors can see why the system acted.

Access controls and tech audit: Least-privilege access is enforced with just-in-time “break-glass” overrides, and every model or API call is logged immutably.

Data minimization and redaction: The agent collects only what is required, auto-detects and masks PHI before model calls, and applies short retention policies.

GCC compliance: The full LLM stack runs in regional data centers across the GCC, with PDPL-compliant consent capture, pseudonymization, and blocked media egress.
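Three of the guardrails listed above (data redaction, scope walls, and abstention thresholds) can be sketched in a few lines of Python. This is an illustration under assumptions, not the blueprint’s implementation. The regex patterns, the `784-YYYY-NNNNNNN-N` national ID layout, the intent names, the `ModelOutput` structure, and the 0.75 threshold are all hypothetical choices, and a production system would rely on a vetted PHI detector and clinically validated thresholds.

```python
import re
from dataclasses import dataclass

# Illustrative patterns only; order matters (the ID is masked before phones).
PHI_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "NATIONAL_ID": re.compile(r"\b784-\d{4}-\d{7}-\d\b"),  # assumed layout
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}

# Scope wall: the only intents the agent may act on (hypothetical names).
ALLOWED_INTENTS = {"intake", "summarize", "route", "book"}

# Abstention threshold (hypothetical value; tune against validation data).
ABSTAIN_BELOW = 0.75


def redact(text: str) -> str:
    """Mask PHI-like spans before the text is sent to the model."""
    for label, pattern in PHI_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


@dataclass
class ModelOutput:
    intent: str        # what the model proposes to do
    confidence: float  # assumed score in [0, 1] supplied by the orchestrator
    reply: str


def apply_guardrails(output: ModelOutput) -> str:
    """Decide what happens to a model output before it reaches the patient."""
    if output.intent not in ALLOWED_INTENTS:
        return "handoff_out_of_scope"  # e.g. diagnosis or prescribing
    if output.confidence < ABSTAIN_BELOW:
        return "ask_clarifier"         # or hand off to a human
    return "proceed"
```

For example, `redact("Call me on +971 50 123 4567")` returns `"Call me on [PHONE]"`, and a proposed `"diagnose"` intent is routed to a human regardless of how confident the model is, because the scope check runs before the confidence check.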

References available on request.
